Intro

In November 2024, IonQ (a $5bn quantum computing company) and Ansys (a $28bn engineering simulation company) announced they were collaborating to accelerate simulations.

This past Thursday, IonQ announced a “major quantum computing milestone demonstrating quantum computing outperforming classical computing”. Specifically, “by running the application on IonQ’s quantum computers, Ansys was able to speed processing performance by up to 12 percent compared to classical computing in the tests.”

The problem is — they have not done this.

Nevertheless, this news has been widely shared by the media with virtually no analysis. Even the quantum market intelligence sites (GQI and Quantum Insider) have either missed the point or simply copied the above quote without quotation marks, passing it on as fact.

Usually there’s a bit of chatter online when something like this happens, but I haven’t seen much, so I’ve decided to write this post myself. I firmly believe we should call out companies that cry wolf, so that we can get truly excited when the real results come along.

TLDR

IonQ and Ansys compared the runtime of a classical simulation that Ansys uses in production (LS-DYNA) when one important step, graph partitioning, was handled by Ansys’ standard algorithm versus a quantum algorithm.

On the surface, they showed that for one of the three problems investigated, they achieved an 11.5% faster runtime than the production-scale techniques used by Ansys. However…

They ran this on a classical computer, not a quantum computer.

For that one problem, they artificially changed the simulation conditions, which led to the speed-up. The other two use cases, which had no such ‘refining’, did not see any speed-up.

IonQ then ran the quantum algorithm on their quantum computers and obtained slightly worse performance, which they describe as ‘comparable’. They did not mention the runtime at all!

IonQ then reported that “By running the application on IonQ’s quantum computers, Ansys was able to speed processing performance by up to 12 percent compared to classical computing in the tests.”

…I have no words. Running on a quantum simulator (i.e. a classical computer), they have not demonstrated any speed-up relative to how Ansys normally runs these simulations in production. Even worse, they have not demonstrated any speed-up… at all… on a quantum computer, which is what they are reporting.

What was this all about?

LS-DYNA is software developed by Ansys for simulating complex, real-world physical behaviour in engineering, including:

Crash testing

Manufacturing processes

Structural mechanics

Fluid-structure interactions

Bioengineering applications

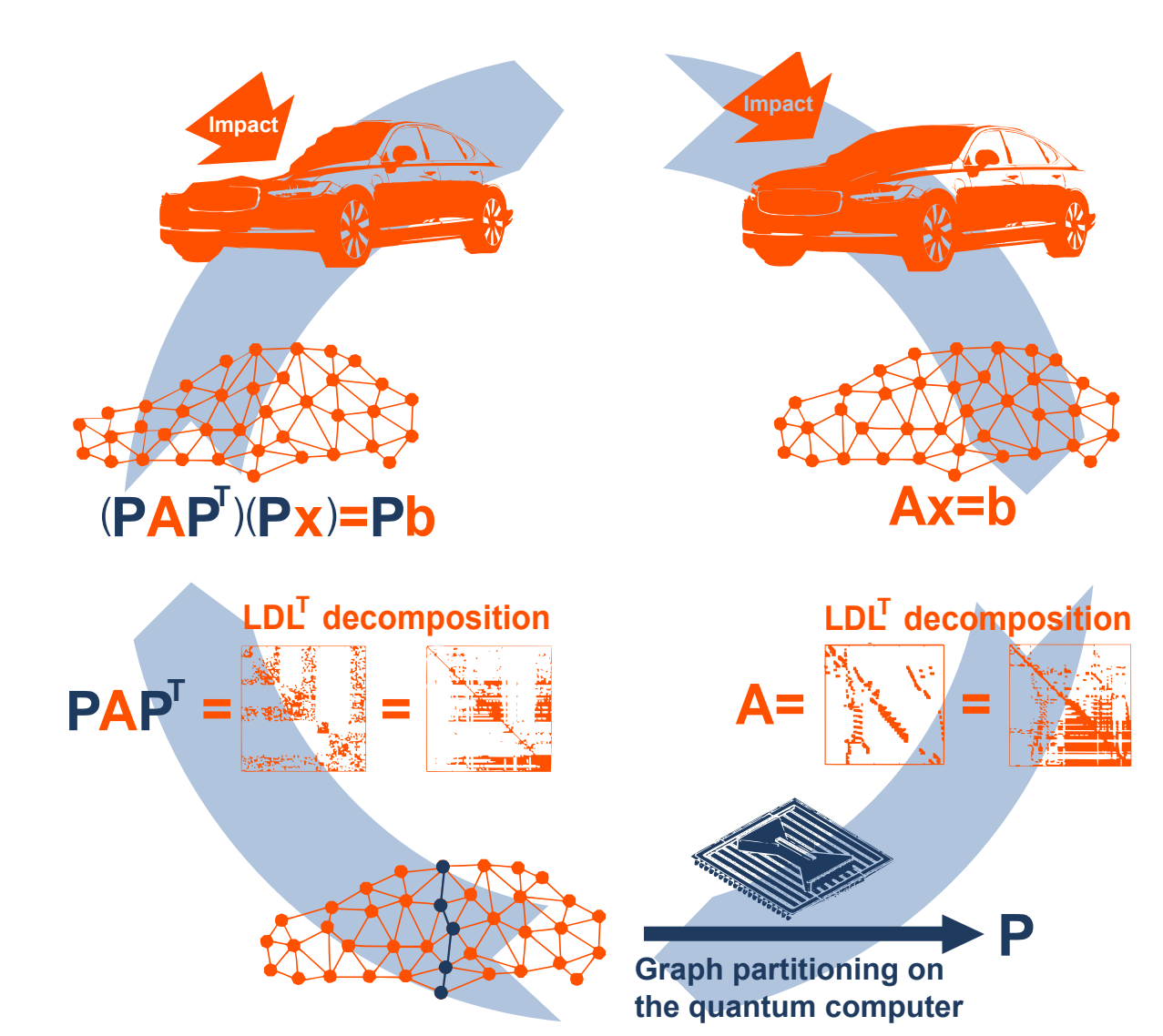

The software generates a mesh, which is then evolved according to physical equations over multiple timesteps to form a simulation (e.g. in the image above, the mesh deforms as a car crash is simulated).

The software needs to parallelise the work across multiple processors, so it divides the mesh into roughly equal chunks in a way that shortens the time to solution; in practice, this means keeping the number of mesh connections that cross between chunks small. You can see above that the car mesh is partitioned into two along the blue line. This is a Graph Partitioning Problem: a key pre-processing step in large-scale simulations.
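
In graph terms, a partitioner is usually scored on two things: balance (the parts should be close to equal in size) and edge cut (how many edges cross between parts, which roughly tracks how much data the processors must exchange). The minimal Python sketch below scores two ways of halving a hypothetical 8-vertex grid. It illustrates these generic metrics only; it is not Ansys’ partitioner or its exact objective.

```python
from collections.abc import Iterable


def edge_cut(edges: Iterable[tuple[int, int]], part: dict[int, int]) -> int:
    """Count edges whose two endpoints are assigned to different parts."""
    return sum(1 for u, v in edges if part[u] != part[v])


def imbalance(part: dict[int, int], num_parts: int = 2) -> float:
    """Largest part size divided by the ideal equal size (1.0 = perfectly balanced)."""
    sizes = [0] * num_parts
    for p in part.values():
        sizes[p] += 1
    ideal = len(part) / num_parts
    return max(sizes) / ideal


# Hypothetical toy "mesh": a 2x4 grid of vertices
#   0 - 1 - 2 - 3
#   |   |   |   |
#   4 - 5 - 6 - 7
edges = [(0, 1), (1, 2), (2, 3), (4, 5), (5, 6), (6, 7),
         (0, 4), (1, 5), (2, 6), (3, 7)]

# Split into left half vs right half: only (1, 2) and (5, 6) cross the cut
vertical = {0: 0, 1: 0, 4: 0, 5: 0, 2: 1, 3: 1, 6: 1, 7: 1}
# Split into top row vs bottom row: all four vertical edges cross the cut
horizontal = {0: 0, 1: 0, 2: 0, 3: 0, 4: 1, 5: 1, 6: 1, 7: 1}

print(edge_cut(edges, vertical), imbalance(vertical))      # 2 1.0
print(edge_cut(edges, horizontal), imbalance(horizontal))  # 4 1.0
```

Both splits are perfectly balanced, but the vertical one cuts half as many edges, so a partitioner would prefer it.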

IonQ, in collaboration with Ansys, used a quantum algorithm, VarQITE (Variational Quantum Imaginary Time Evolution), as a ‘partitioner’. They built a pipeline in which graph partitions generated by VarQITE were plugged into LS-DYNA simulations. A better partitioner should lead to a faster simulation.
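
How the partitioning problem is handed to VarQITE isn’t spelled out here. A common approach, and an assumption on my part rather than necessarily the paper’s exact formulation, is to write balanced bisection as an Ising-style cost function: each vertex gets a spin of +1 or −1 for its side of the cut, and the cost adds the number of cut edges to a penalty for unequal sides. VarQITE is a variational method for approximately finding the lowest-cost (ground) state of such a function. In the sketch below, brute-force enumeration stands in for VarQITE purely for illustration, and the function names and penalty weight are hypothetical.

```python
import itertools


def bisection_energy(spins, edges, penalty=1.0):
    """Ising-style cost for balanced graph bisection (hypothetical formulation).

    spins: tuple of +1/-1 values, one per vertex, giving its side of the cut.
    Cut term: (1 - s_i * s_j) // 2 is 1 if edge (i, j) crosses the cut, else 0.
    Balance term: (sum of spins) ** 2 is 0 only when both sides are equal in size.
    """
    cut = sum((1 - spins[i] * spins[j]) // 2 for i, j in edges)
    balance = sum(spins) ** 2
    return cut + penalty * balance


def brute_force_ground_state(num_vertices, edges, penalty=1.0):
    """Try every spin assignment and return the lowest-cost one.

    Stands in for VarQITE only for illustration: it scales as 2**n,
    so it is feasible just for tiny graphs.
    """
    best = min(itertools.product((-1, 1), repeat=num_vertices),
               key=lambda spins: bisection_energy(spins, edges, penalty))
    return best, bisection_energy(best, edges, penalty)


# A hypothetical 2x4 grid: top row 0-3, bottom row 4-7
grid_edges = [(0, 1), (1, 2), (2, 3), (4, 5), (5, 6), (6, 7),
              (0, 4), (1, 5), (2, 6), (3, 7)]
spins, energy = brute_force_ground_state(8, grid_edges)
same_side_as_0 = sorted(i for i, s in enumerate(spins) if s == spins[0])
print(energy, same_side_as_0)  # 2.0 [0, 1, 4, 5]
```

The lowest-cost state is the balanced split down the middle of the grid, with two cut edges. On a real 10,000-node graph exhaustive search is impossible, which is why heuristics (classical or quantum) are used instead.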

These simulations covered three real-world engineering problems:

Vehicle roof crush

Blood pump fluid dynamics

Vibration analysis

How does the software normally work?

Ansys creates a mesh with hundreds of thousands of vertices. They then coarsen this mesh to a 10,000-node graph and use a ‘partitioner’ to work out the best way to split it. The original mesh (which can be encoded as a matrix) is then partitioned using this strategy, which restructures the matrix representation. We can then solve a linear system, containing this matrix and the known external forces, to find how each point in the mesh moves. Repeating this over multiple timesteps produces a simulation of, e.g., a car crash.
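
The coarsening step can be illustrated with matching-based coarsening of the kind used in multilevel partitioners: neighbouring vertices are merged in pairs, and repeating this shrinks the graph until it is small enough to partition directly. Ansys’ actual coarsening method isn’t described here, so treat this as a generic, hypothetical sketch. It also shows how a partition found on the coarse graph is projected back onto the original vertices.

```python
from collections import defaultdict


def coarsen_once(num_vertices, edges):
    """One level of greedy matching-based coarsening.

    Each vertex is paired with at most one still-unmatched neighbour; each
    pair (or leftover single vertex) becomes one coarse vertex. Returns the
    fine->coarse mapping and the coarse edges, weighted by how many fine
    edges were merged into each.
    """
    neighbours = defaultdict(list)
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)

    coarse_id = [-1] * num_vertices
    next_id = 0
    for u in range(num_vertices):
        if coarse_id[u] != -1:
            continue  # already merged into a pair
        coarse_id[u] = next_id
        # Tools such as METIS prefer the heaviest edge; on this unweighted
        # toy graph we simply take the first unmatched neighbour.
        for v in neighbours[u]:
            if coarse_id[v] == -1:
                coarse_id[v] = next_id
                break
        next_id += 1

    coarse_edges = defaultdict(int)
    for u, v in edges:
        cu, cv = coarse_id[u], coarse_id[v]
        if cu != cv:  # edges inside a merged pair disappear
            coarse_edges[(min(cu, cv), max(cu, cv))] += 1
    return coarse_id, dict(coarse_edges)


def project_partition(coarse_id, coarse_part):
    """Give every original vertex the part of the coarse vertex it was merged into."""
    return [coarse_part[c] for c in coarse_id]


# A hypothetical 2x4 grid: top row 0-3, bottom row 4-7
grid_edges = [(0, 1), (1, 2), (2, 3), (4, 5), (5, 6), (6, 7),
              (0, 4), (1, 5), (2, 6), (3, 7)]
coarse_id, coarse_edges = coarsen_once(8, grid_edges)
print(coarse_id)     # [0, 0, 1, 1, 2, 2, 3, 3]
print(coarse_edges)  # {(0, 1): 1, (2, 3): 1, (0, 2): 2, (1, 3): 2}

# Partition the 4-vertex coarse graph, then map it back to the 8 fine vertices
print(project_partition(coarse_id, [0, 1, 0, 1]))  # [0, 0, 1, 1, 0, 0, 1, 1]
```

The quality of the final partition depends on the coarse graph as well as on the partitioner, which is worth keeping in mind for the BloodPump discussion below.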

What did IonQ and Ansys demonstrate?

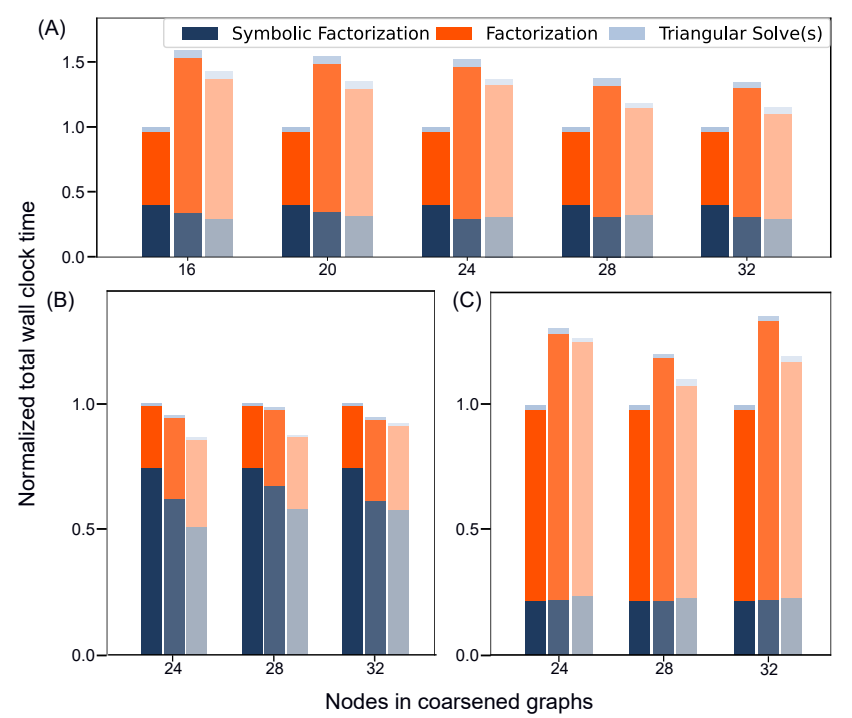

In their paper, they compare the runtimes of solving the system after the partitioning has happened: the better the partitioner performs, the faster the system should be solved. In the graph above, they compare a baseline (Ansys’ standard partitioner with typical production settings) with the VarQITE ‘quantum’ partitioner. In each group of three bars, the left bar is the baseline, the right bar uses VarQITE, and the middle bar uses the same internal Ansys partitioner as the left bar.

We’re looking to compare the left bar with the right bar in each group. As you can see, only in (B), the ‘Blood Pump’ use case, did they see any advantage.

“The differences of the left to the right bar in panel (B) are, reading from left to right, −11.93%, −11.51%, and −9.80%. This means that the quantum solution improved over the industry-grade heuristic by close to 12%”.
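
These percentages are relative differences in wall-clock time against the baseline bar. A minimal sketch of that arithmetic, assuming this is how the differences are defined (the quote does not give the formula); the timings in the example are hypothetical, not taken from the paper:

```python
def percent_change(baseline_s: float, new_s: float) -> float:
    """Relative change in wall-clock time versus the baseline, in percent.

    Negative values mean the new run was faster than the baseline.
    """
    return (new_s - baseline_s) / baseline_s * 100.0


def speedup(baseline_s: float, new_s: float) -> float:
    """How many times faster the new run is (baseline time / new time)."""
    return baseline_s / new_s


# Hypothetical timings in seconds, chosen only to reproduce a -11.93% change
baseline, quantum_partitioned = 100.0, 88.07
print(f"{percent_change(baseline, quantum_partitioned):.2f}%")  # -11.93%
print(f"{speedup(baseline, quantum_partitioned):.3f}x")
```

Whatever the exact definition, the comparison is only meaningful if the baseline reflects the conditions Ansys actually uses in production.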

So IonQ is right! They were able to speed processing performance by up to 12% compared to classical computing by running the application on IonQ’s quantum computers. Well… no. Everything here was run on a classical computer, so VarQITE was not run on IonQ’s quantum computers for this result. And all is not what it seems…

Trickery or nuances?

Just below the graph, the authors say “For these experiments, we refined the mesh of the BloodPump problem by making it 100x more dense so the factorization time was meaningful enough to measure improvements.” So the 12% runtime improvement for BloodPump wasn’t compared to a typical Ansys simulation.

For BloodPump, Ansys normally uses a mesh with 200,000 vertices and 3.5M edges. In this paper, they increased “the number of vertices to 2.6 million and the number of edges to 40 million” for the BloodPump mesh. Why is this important?
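
For reference, the scale-up implied by the vertex and edge counts quoted above works out as follows (plain arithmetic on those figures):

```python
def scale_factors(before: dict[str, int], after: dict[str, int]) -> dict[str, float]:
    """Ratio of each mesh statistic after refinement to its production value."""
    return {key: after[key] / before[key] for key in before}


# BloodPump mesh sizes as quoted above
production = {"vertices": 200_000, "edges": 3_500_000}
refined = {"vertices": 2_600_000, "edges": 40_000_000}

for key, factor in scale_factors(production, refined).items():
    print(f"{key}: {factor:.1f}x larger")
# vertices: 13.0x larger
# edges: 11.4x larger
```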

It is important because the runtime improvement comes from this increase in mesh size. They are artificially providing more structure to the coarse graph: a larger full mesh ⇒ a better coarsened graph ⇒ better partitioning, even with the same partitioner. You can see this in the figure above: only in BloodPump does the middle bar have a faster runtime than the left bar (the left and middle bars use the same internal Ansys partitioner). By changing the mesh size, they are adding another variable with many potential implications for parallel efficiency, fill-in sensitivity, etc. It is not what Ansys uses in production, and so it is not directly comparable.

It is true that in all cases the VarQITE partitioner works better than the original Ansys partitioner when both are applied to the same number of nodes. But you can only claim to beat classical computing under the same conditions, and in use cases (A) and (C), where the conditions were left unchanged, they see worse runtimes.

But what about quantum hardware?

IonQ does not even discuss the runtime when executing the VarQITE algorithm on their quantum hardware. They discuss algorithm convergence, error rates and merit factors, arguing that the results are “comparable” to the classically simulated runs (they are slightly worse if you look at the paper), but there is no mention at all of how long the QPU runs took, or how long the linear system solve took after a VarQITE run on a QPU. I can only assume that VarQITE’s worse performance on a QPU, compared with a quantum simulator, led to a significantly longer runtime for the solver.

Conclusion

I would normally blame a company’s media team for spreading inaccurate information; however, some of the blame lies with the authors here. The abstract says:

“We find that the wall clock time of the heuristics solutions used in the industry-grade solver is improved by up to 12%. We also execute the VarQITE algorithm on quantum hardware (IonQ Aria and IonQ Forte) and find that the results are comparable to noiseless and noisy simulations.”

Firstly, the authors leave out a very important nuance: the runtime is improved by 12% only when the simulation conditions are changed beyond industry-grade settings.

Secondly, by saying ‘results’ without any qualification (such as performance or merit factors), the abstract implies that the quantum hardware achieves comparable wall clock times, since wall clock time is the subject of the previous sentence.

We all understand why IonQ has done this: they are a publicly traded company wanting to stay relevant by pushing out ‘major milestones’. However, it is bad practice, and it will dilute the impact of the genuine milestones that will come in the next 2–3 years, confusing the public and investors.

I’m not sure whether this is misleading or just lazy writing and poor communication; I’ll leave that to you. Hopefully this is challenged before it appears in a peer-reviewed journal, although, given the Microsoft paper, who knows? I’ll do a post soon about Microsoft’s announcements and this week’s APS meeting.

Please reach out to me if you have any comments, refutations, or suggestions. I’d be happy to make changes.

Strong convictions, loosely held.